Web Scraping

Content

- Is It Ok To Scrape Data From Google Results? [Closed]

- Not The Answer You're Looking For? Browse Other Questions Tagged Web-scraping Or Ask Your Own Question.

- How To Scrape 1,000 Google Search Result Links In 5 Minutes.

- This Is The Best Way To Scrape Google Search Results Quickly, Easily And For Free.

Is It Ok To Scrape Data From Google Results? [Closed]

For convenience, I will use the script in a Colab notebook to show how it works. It takes a search query from you, makes a request to the Google search engine, and retrieves the results. The results are automatically saved in separate CSV, Excel, and JSON files. You can download those files to your PC and use them anywhere. Google is today's entry point to the world's biggest resource: information.

Not The Answer You're Looking For? Browse Other Questions Tagged Web-scraping Or Ask Your Own Question.


This makes it very easy for us to select all of the organic results on a particular search page. First, we are going to write a function that grabs the HTML from a Google.com search results page. The function takes three arguments: a search term, the number of results to be displayed and a language code. There are a few requirements we need in order to build our Google scraper. In addition to Python 3, we are going to need to install a couple of popular libraries, namely requests and bs4 (Beautiful Soup).
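As a rough illustration of the function described above, here is a minimal sketch. The function names `build_search_url` and `fetch_results`, the User-Agent string, and the query-string names `q`, `num` and `hl` are assumptions made for this example, not something the article specifies, and Google may change or ignore them.

```python
from urllib.parse import urlencode

# Hypothetical browser-like User-Agent; any realistic value could be used here.
USER_AGENT = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)


def build_search_url(search_term, number_results=10, language_code="en"):
    """Build a Google search URL from a term, a result count and a language."""
    # Assumption: q = query, num = results per page, hl = interface language.
    params = {"q": search_term, "num": number_results, "hl": language_code}
    return "https://www.google.com/search?" + urlencode(params)


def fetch_results(search_term, number_results=10, language_code="en"):
    """Request the results page and return (search_term, html)."""
    # Imported here so the URL builder works even without requests installed.
    import requests

    url = build_search_url(search_term, number_results, language_code)
    response = requests.get(url, headers={"User-Agent": USER_AGENT}, timeout=10)
    response.raise_for_status()  # raise on 4xx/5xx instead of returning bad HTML
    return search_term, response.text
```

Calling `build_search_url("web scraping", 20, "en")` only builds the URL, so it can be checked without sending any request to Google.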

How To Scrape 1,000 Google Search Result Links In 5 Minutes.

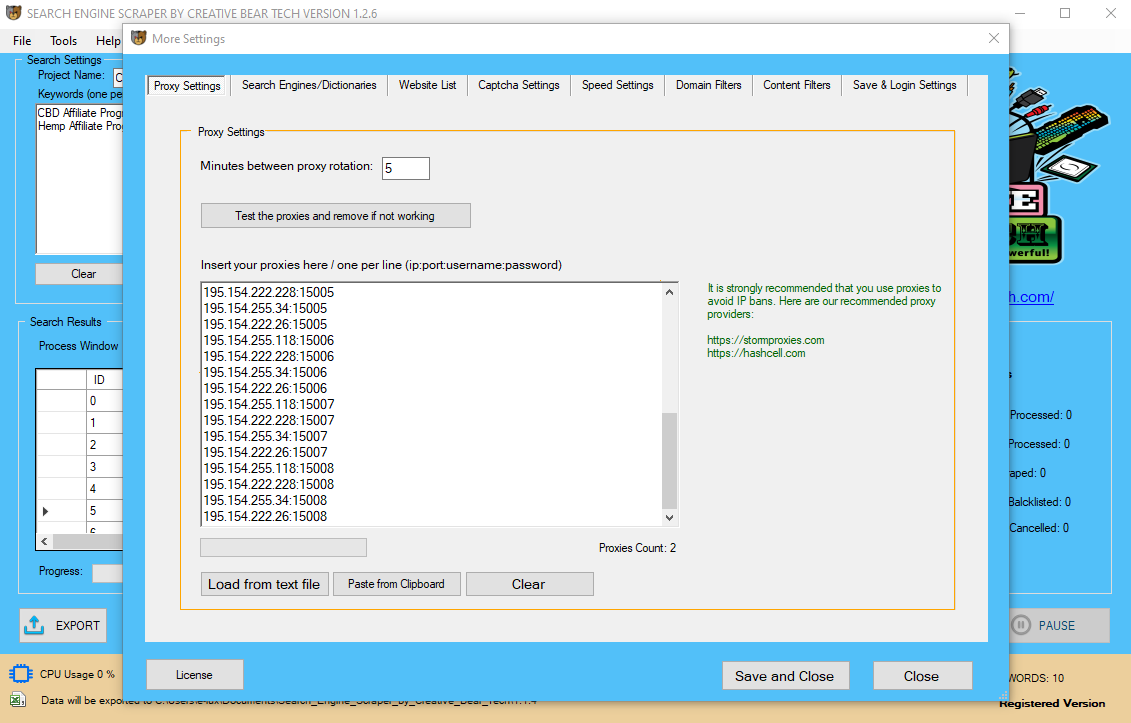

If all went well, the status code returned should be 200 OK. If, however, Google has realised we are making automated requests, we will be greeted by a CAPTCHA and a 503 error page. Finally, our function returns the search term passed in and the HTML of the results page. The SERP API is location-based and returns geolocated search engine results to maximise relevance for users. While working on a project recently, I needed to grab some Google search results for specific search terms and then scrape the content from the result pages. For continuous data scraping, you should use a pool of proxies sized according to the average number of results of each search query.
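The status check described above could be sketched as follows. The helper name `classify_response` is hypothetical, and treating 429 alongside 503 as a "blocked" signal is an assumption of this example. The CAPTCHA text match is a crude heuristic that would also fire on a genuine results page mentioning the word.

```python
# Assumption: these status codes indicate that automated traffic was detected.
BLOCK_STATUS_CODES = {429, 503}


def classify_response(status_code, html=""):
    """Return 'ok', 'blocked' or 'error' for a search response."""
    # Crude heuristic: a blocked page usually mentions a CAPTCHA in its body.
    if status_code in BLOCK_STATUS_CODES or "captcha" in html.lower():
        return "blocked"
    if status_code == 200:
        return "ok"
    return "error"
```

A caller can then decide whether to parse the page, back off and retry later, or give up.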

This Is The Best Way To Scrape Google Search Results Quickly, Easily And For Free.

Get started with just a few clicks by signing up for our free plan. There are many Google scrapers you can use, but this is one of the simplest. However, it is not meant for heavy scraping, as you will end up getting banned by Google's servers. Or, if you know how to work with proxies, you may succeed in circumventing the IP ban.

Most Crawlers Don’t Pull Google Results, Here’s Why.

If you came here looking for a fast and efficient solution for collecting data from a Google search, then you came to the right place. In this course, I will show you how to use Python and Google Cloud Platform (GCP) to grab web URLs from Google search results. By using GCP, you are given a robust set of tools to customise your collection. If you still continue scraping data from Google search results, Google will take a first serious step. You will get the virus message again, and now you have to enter the CAPTCHA code to proceed.

The Best Way To Scrape Google Is Manually.

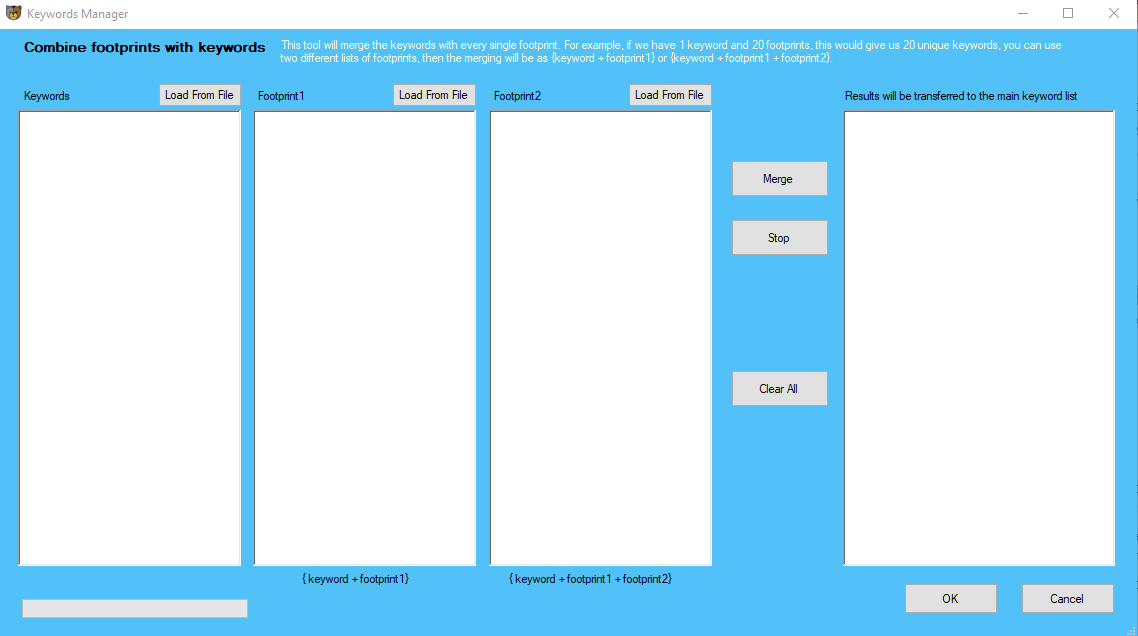

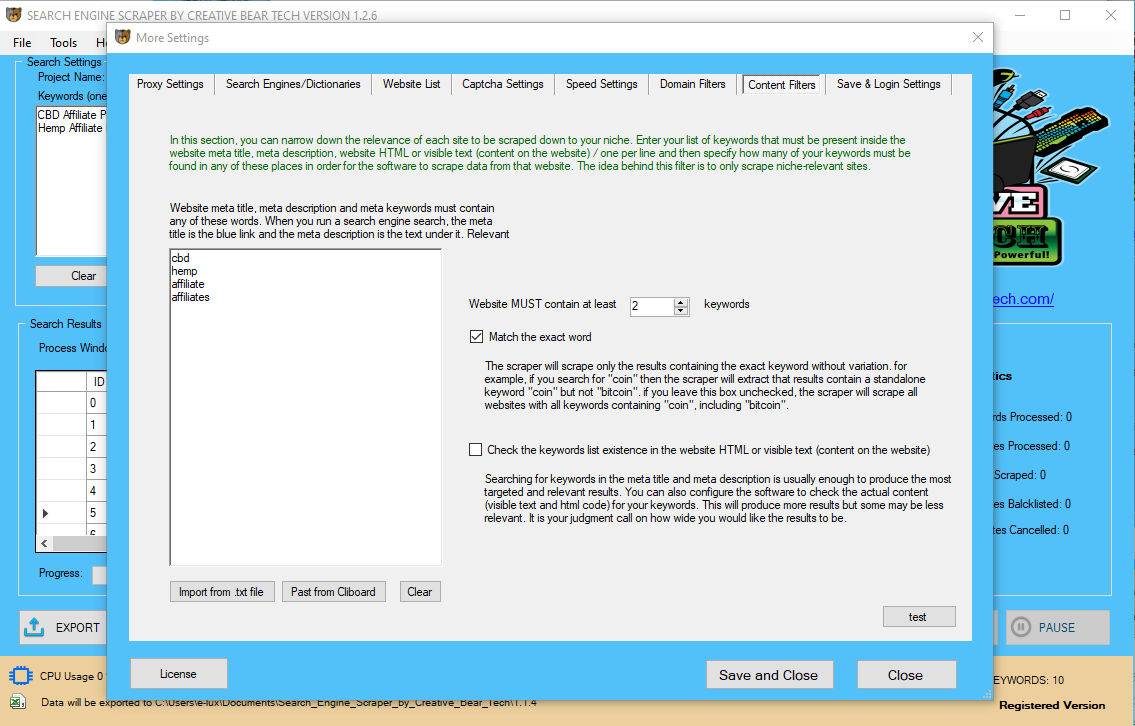

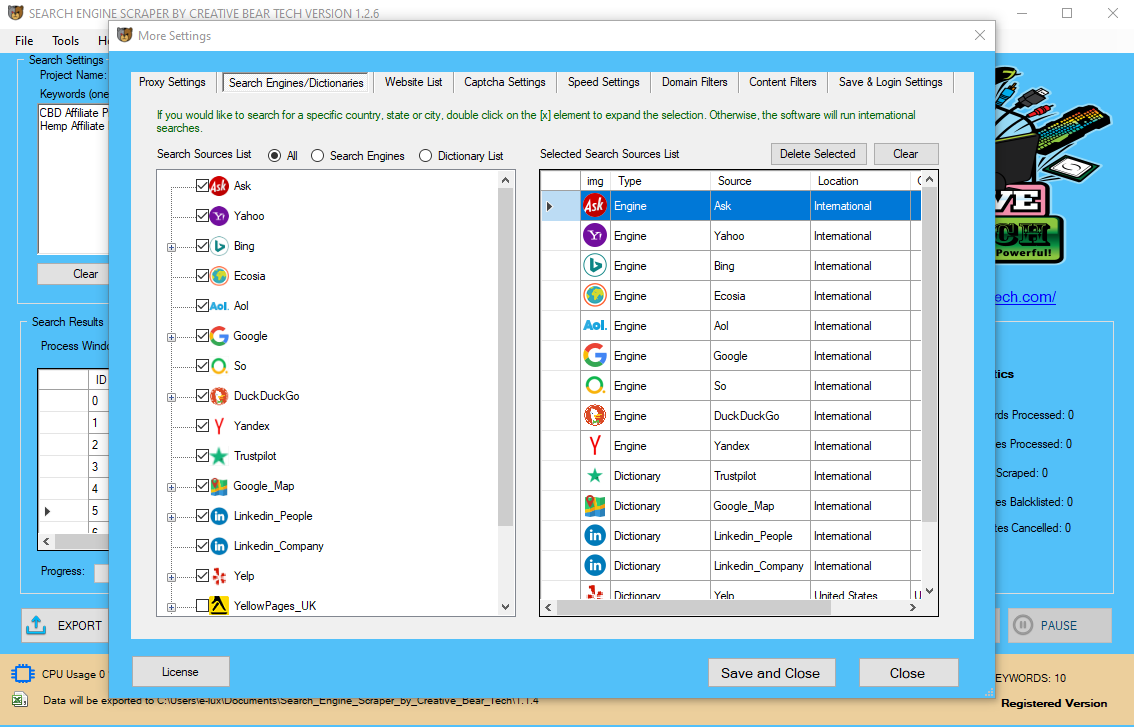

In that case, if you keep relying on an outdated method of scraping SERP data, you will be lost in the trenches. Our job is to give you SERP data that is as similar as possible to human search behaviour. Hence, our API offers much more than the classic organic and paid search results. ScrapeBox has a custom search engine scraper which can be trained to harvest URLs from virtually any website that has a search function.

In this way, you can make use of this simple and powerful tool. Just scrape Google search results for any search query and then use the Excel file that it generates wherever you want. With this, you can easily scrape search result pages, which is a good number for this dead-simple script. Just use the tool I have mentioned here without even setting anything up on your PC. Just run it in Colab, get the search results, and then download the generated Excel file.

We have a tutorial video, or our support staff can help you train the specific engines you need. You can even export engine files to share with friends or work colleagues who own ScrapeBox too. The custom scraper comes with roughly 30 search engines already trained, so to get started you simply need to plug in your keywords and start it running, or use the included Keyword Scraper. There is even an engine for YouTube to harvest YouTube video URLs, and one for Alexa Topsites to harvest domains with the highest traffic rankings.

Our Google Search Results API is powered by robust infrastructure to return results in real time. When new SERP types are released by search engines, we add them to our Google Search API as soon as possible. Our SERP API enables you to scrape search engine result pages in real time. Now it is time to build a Python script leveraging the Google Search API to collect search engine result page (SERP) listings. The Locations API lets you search for SerpWow-supported Google search locations.

Zenserp.com is a Google SERP API that enables you to scrape search engine result pages in an easy and efficient way. Having to collect SERPs programmatically is a very common challenge for developers. If you are looking for a simple Google search results scraper, then you are in the right place. An example is below (this will import google search, run a search for "Sony 16-35mm f2.8 GM lens" and print out the URLs for the search). To be able to scrape these results, we need to understand the format in which Google returns them. Requests is a popular Python library for performing HTTP API calls.

When you try to import difficult websites in Power BI or Excel Power Query, you will end up with the Document entity in the Navigator. In the next part of this article, we will demonstrate how to extract tables by modifying the query and extracting the relevant elements in the HTML. Before we begin our challenge, let's briefly review the simple scenario, when the import of tables is easy. We will demonstrate it with this population table from Wikipedia (yes, most, if not all, of the tables in Wikipedia are easily imported to Power BI). Feel free to skip this part if you are familiar with the Web connector in Power BI or Power Query in Excel.

Highly accurate SERP data that returns results in a manner that looks like what a typical human user would search and see. The SERP API retrieves the top 100 search result pages for a specific search phrase. SERP (search engine results page) data can be a useful tool for website owners. You can pull information into your project to provide a more robust user experience. Chrome has around 8 million lines of code and Firefox even 10 million. If you are already a Python user, you are likely to have both these libraries installed.

Google allows users to pass a number of parameters when accessing their search service. This allows users to customise the results we receive back from the search engine. In this tutorial, we are going to write a script allowing us to pass a search term, a number of results and a language filter. This library is used in the script to invoke the Google Search API with your RapidAPI credentials. In this blog post, we are going to harness the power of this API using Python. We will create a utility Python script to create a custom SERP (Search Engine Results Page) log for a given keyword.

And it is all the same with other search engines as well. Most of the things that work right now will soon become a thing of the past. Huge companies invest a lot of money to push technology forward (HTML5, CSS3, new standards) and each browser has a unique behaviour. Therefore it is almost impossible to simulate such a browser manually with HTTP requests. This means Google has numerous ways to detect anomalies and inconsistencies in browsing usage.

The CSV that it generates has the title and URL in it, and it can scrape more than 100 results easily. In my case, I was able to get 150+ results, which is equivalent to scraping 15 search results pages, which isn't bad. Zenserp's SERP API is a powerful tool if you need real-time search engine data. It can power websites and applications with an easy to use and install option. If something can't be found in Google, it may well mean it is not worth finding. Naturally there are tons of tools out there for scraping Google Search results, which I don't intend to compete with. Our Google SERP API has the required infrastructure to process any number of requests and return SERPs in an easy-to-use JSON format. Being powered by an intelligent parser, our Google search results API reliably provides all SERP elements. In case you need to scrape other websites, have a look at our Scraper API.


- Just scrape Google search results for any search query and then use the Excel file that it generates wherever you want.

- With this, you can easily scrape search result pages, which is a good number for this dead-simple script.

- The results that it produces are automatically saved in separate CSV, Excel, and JSON files.

- In this way, you can make use of this simple and powerful tool.

I was struggling to scrape data from search engines, and the "USER_AGENT" did help me. I'd like to know how I can save this scraped dataset to a CSV file. I've tried with Pandas, but maybe I've made some mistakes. All the organic search results on the Google search results page are contained within 'div' tags with the class of 'g'.
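To make the 'div' with class 'g' structure and the CSV question concrete, here is a small sketch. It deliberately uses Python's standard-library `html.parser` and `csv` modules instead of Beautiful Soup or Pandas so that it runs without extra installs. The class and function names are hypothetical, the sample HTML is invented for illustration, and Google's real markup may differ or change.

```python
import csv
import io
from html.parser import HTMLParser


class OrganicResultParser(HTMLParser):
    """Collect the first link and <h3> title inside each <div class="g">."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._depth = 0          # div nesting inside the current result (0 = outside)
        self._current = None     # result dictionary being filled in
        self._in_title = False   # True while inside an <h3>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self._depth:
                self._depth += 1
            elif "g" in (attrs.get("class") or "").split():
                self._depth = 1
                self._current = {"title": "", "link": ""}
        elif self._depth and tag == "a" and not self._current["link"]:
            self._current["link"] = attrs.get("href") or ""
        elif self._depth and tag == "h3":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "h3":
            self._in_title = False
        elif tag == "div" and self._depth:
            self._depth -= 1
            if self._depth == 0:  # closed the outer <div class="g">
                self.results.append(self._current)
                self._current = None

    def handle_data(self, data):
        if self._in_title and self._current is not None:
            self._current["title"] += data


def parse_results(html):
    """Return a list of {'title', 'link'} dictionaries found in the HTML."""
    parser = OrganicResultParser()
    parser.feed(html)
    return parser.results


def results_to_csv(results, file_obj):
    """Write the list of dictionaries as CSV rows with a header line."""
    writer = csv.DictWriter(file_obj, fieldnames=["title", "link"])
    writer.writeheader()
    writer.writerows(results)


# Invented sample markup that mimics the structure described above.
SAMPLE_HTML = (
    '<div class="g"><div><a href="https://example.com/">'
    "<h3>Example Domain</h3></a></div></div>"
    '<div class="other"><a href="https://ignored.example/">x</a></div>'
)

rows = parse_results(SAMPLE_HTML)
buffer = io.StringIO()
results_to_csv(rows, buffer)
```

To write to disk instead of an in-memory buffer, pass a file opened with `open("results.csv", "w", newline="", encoding="utf-8")`.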


Ever since the Google Web Search API deprecation in 2011, I've been looking for an alternative. I need a way to get links from Google search into my Python script. So I made my own, and here is a quick guide on scraping Google searches with requests and Beautiful Soup.

This CAPTCHA code will generate a verification cookie, which allows you to carry on. Scraping data from Google search results is a frequently required job for SEO professionals and Internet experts. Through data scraping, it becomes possible to monitor ranking positions, link popularity, the PPC market, and much more. It doesn't matter whether you offer web scraping as an SEO service, embed it in your site, or need it for personal projects: you need to be highly knowledgeable to succeed. If you perform too many requests over a short period, Google will start to throw CAPTCHAs at you. The dynamic nature of JavaScript alone makes it impossible to scrape undetected.

A module to scrape and extract links, titles and descriptions from various search engines. Scrape Google and other search engines from our fast, easy, and complete API. To perform a search, Google expects the query to be in the parameters of the URL. A snapshot (shortened for brevity) of the JSON response returned is shown below. For details of all the fields from the Google search results page that are parsed, please see the docs. This is guaranteed to be the fastest and most fruitful way to gather data from your searches. This will also open the door to many other opportunities to explore Python and GCP for future projects, such as scraping and collecting images. Training new engines is pretty easy; many people are able to train new engines just by looking at how the 30 included search engines are set up.

A query I wrote to scrape data from the Excel UserVoice site works on the PC I built it on, but not on the other PC I subsequently sent the file to. Looking into this, I see that the HTML structure for the same site differs between computers, which means the query bombs out. Hi Gil, are you able to pull data from an Excel file stored on a personal OneDrive using the URL hack mentioned in Melissa's blog? Could you share the steps?

In this blog post, we figured out how to navigate the tree-like maze of Children/Table elements and extract dynamic table-like search results from web pages. We demonstrated the technique on the Microsoft MVP website, and showed two methods to extract the data.

Google's supremacy in search engines is so great that people often wonder how to scrape data from Google search results. While scraping is not allowed as per their terms of use, Google does provide an alternative and legitimate way of capturing search results. This is the best way to scrape Google search results quickly, easily and for free. Serpproxy is known for its super-fast scraping that returns accurate results in JSON format. The Zenserp SERP API allows you to scrape search engine results pages in an easy and efficient manner. The API takes what can be a cumbersome manual process and turns it into almost automatic work.

This heap of SERP logs becomes a treasure trove of data for you to collect search results and find the latest and most popular websites for a given topic. It has two API endpoints, each supporting its own variant of input parameters for returning the same search data. With the Google Search API, you can programmatically invoke Google Search and capture search results. Monitoring the search listing helps you keep a check on the popular links for a keyword and track changes in the search rankings.

The obvious way in which we obtain Google Search results is via Google's search page. However, such HTTP requests return lots of unnecessary information (a whole HTML web page). Whatever your end goal is, the SERP Log script can be spawned thousands of times to generate many SERP listings for you.


Manually checking SERP data used to be easy and reliable in the past. You don't often get accurate results from it now, because a lot of factors like your search history, device, and location affect the process. Google constantly keeps changing its SERP structure and overall algorithm, so it's essential to scrape search results through accurate sources. Use the page and num parameters to paginate through Google search results. The maximum number of results returned per page (controlled by the num param) is 100 (a Google-imposed limitation) for all search_types other than Google Places, where the maximum is 20.

If you hear yourself ask, "Is there a Google Search API?", this Python package lets you scrape and parse Google search results using SerpWow. In addition to Search, you can also use this package to access the SerpWow Locations API, Batches API and Account API. In this post we are going to look at scraping Google search results using Python. There are a number of reasons why you might want to scrape Google's search results.

Google will block you if it deems that you are making automated requests. Google will do this regardless of the method of scraping, if your IP address is deemed to have made too many requests. One option is simply to sleep for a significant amount of time between each request. In my personal experience, sleeping for a number of seconds between each request will allow you to query hundreds of keywords. The second option is to use a number of different proxies to make your requests with. The fact that our results data is a list of dictionary objects makes it very easy to write the data to CSV, or to write the results to a database. Once we get a response back from the server, we raise the response for a status code. By switching up the proxy used, you are able to consistently extract results from Google. The faster you want to go, the more proxies you are going to need. We can then use this script in a number of different situations to scrape results from Google.

It is good for personal use, and if you are a programmer then you can further contribute to this project and maybe add more features. The whole script that scrapes Google Search results is saved in this Colab notebook. Or, you can access the GitHub repository of the same from here. Just open the notebook and then make a copy of it in your account. Next, you just need to customise the search query in it.

You can easily integrate this solution via browser, cURL, Python, Node.js, or PHP. With real-time and super accurate Google search results, Serpstack is hands down one of my favorites in this list. It is built on a JSON REST API and goes well with every programming language out there. You can even use the API Playground to visually build Google search requests using SerpWow. Our SERP API allows you to scrape search engine result pages in an easy and efficient way.
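The two options mentioned above for avoiding blocks, sleeping between requests and rotating proxies, can be combined. The sketch below is one possible arrangement, not the article's own script. The function names are hypothetical, the proxy addresses are placeholders, and the default delay range is an illustrative value, since the article does not give a figure here.

```python
import itertools
import random
import time


def schedule_requests(queries, proxies, min_delay=5.0, max_delay=10.0):
    """Pair each query with a proxy (round-robin) and a randomised pause."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    proxy_cycle = itertools.cycle(proxies)
    return [
        (query, next(proxy_cycle), random.uniform(min_delay, max_delay))
        for query in queries
    ]


def run_throttled(queries, proxies, fetch, sleep=time.sleep,
                  min_delay=5.0, max_delay=10.0):
    """Yield (query, result) pairs, sleeping after every request.

    fetch(query, proxy) is supplied by the caller. With the requests library
    it could pass proxies={"http": proxy, "https": proxy} to requests.get.
    """
    plan = schedule_requests(queries, proxies, min_delay, max_delay)
    for query, proxy, delay in plan:
        yield query, fetch(query, proxy)
        sleep(delay)  # pause before the next request to stay under the radar


# Placeholder proxy addresses, for illustration only.
EXAMPLE_PROXIES = ["http://proxy-one.example:8080", "http://proxy-two.example:8080"]
example_plan = schedule_requests(["shoes", "hats", "coats"], EXAMPLE_PROXIES, 1.0, 2.0)
```

Passing `fetch` and `sleep` as arguments keeps the scheduling logic separate from the network call, so the rotation can be checked without contacting Google.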



The first is 'Google Search' (install via pip install google). This library lets you consume Google search results with just one line of code. You simply scroll down to the third-last cell and define your search query in the "googleSearchQuery" variable. Our Google Search Result API enables you to scrape Google SERPs. This is annoying and will limit how much or how fast you scrape. That is why we created a Google Search API which lets you perform unlimited searches without worrying about CAPTCHAs. Built with "speed" in mind, Zenserp is another popular choice that makes scraping Google search results a breeze. This API can handle any number of requests with ease, which really drowns out the thought of doing things manually. As I mentioned earlier, checking SERPs manually can be hit or miss at times. There are a lot of factors that you need to take care of to make sure you're getting the right results. However, such is not the case with a SERP API. You're guaranteed to receive only the most accurate data, every time.

